Section: New Software and Platforms

Platforms

Robot Vision Platform

Participant: Fabien Spindler [contact].

We use two industrial robotic systems built by Afma Robots in the 1990s to validate our research in visual servoing and active vision. The first is a 6 DoF Gantry robot and the second is a 4 DoF cylindrical robot (see Fig. 2.a). Both robots are equipped with cameras. The Gantry robot can also carry grippers on its end-effector.

We also use a haptic Virtuose 6D device from Haption (see Fig. 2.b). This device serves as the master device in many of our shared control activities (see Sections 9.3.1.3, 7.3.3, and 7.3.4).

Note that eight papers published by Lagadic in 2017 include results validated on this platform [35], [37], [15], [63], [58], [48], [51], [52].

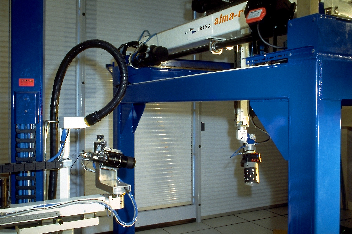

Mobile Robots

Participants: Fabien Spindler [contact], Marie Babel, Patrick Rives.

Indoor Mobile Robots

For fast prototyping of algorithms in perception, control and autonomous navigation, the team uses Hannibal in Sophia Antipolis, a cart-like platform built by Neobotix (see Fig. 3.a), and, in Rennes, a Pioneer 3DX from Adept (see Fig. 3.b). These platforms are equipped with various sensors needed for SLAM purposes, autonomous navigation, and sensor-based control.

Moreover, to validate our research on the personally assisted living topic (see Section 7.5.3), we have three electric wheelchairs in Rennes: one from Permobil, one from Sunrise and one from YouQ (see Fig. 3.c). Each wheelchair is controlled through a plug-and-play system inserted between the joystick and the low-level control of the wheelchair. This system lets us acquire the user's intention from the joystick position and control the wheelchair by applying corrections to its motion. The wheelchairs have been fitted with cameras and ultrasound sensors to perform the servoing required to assist handicapped people.
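
The idea of reading the user's intention from the joystick and then correcting the resulting motion can be illustrated with a minimal sketch. The correction law used on the wheelchairs is not described here, so everything below is an assumption made for illustration: the `Command` type, the `correct_command` function, the two distance thresholds, and the choice of a simple linear attenuation of forward speed based on one obstacle distance (for instance, from an ultrasound sensor).

```python
from dataclasses import dataclass


@dataclass
class Command:
    """Hypothetical velocity command derived from the joystick position."""
    linear: float   # forward velocity in m/s (negative means reverse)
    angular: float  # turn rate in rad/s


def correct_command(user: Command,
                    obstacle_distance_m: float,
                    stop_distance_m: float = 0.3,
                    slow_distance_m: float = 1.5) -> Command:
    """Attenuate the user's forward speed as a frontal obstacle gets closer.

    Assumed rule (not taken from the report): the forward speed is kept
    unchanged beyond slow_distance_m, scaled linearly in between, and set
    to zero at or below stop_distance_m. Reverse motion and rotation are
    left entirely to the user.
    """
    ratio = (obstacle_distance_m - stop_distance_m) / (slow_distance_m - stop_distance_m)
    scale = min(1.0, max(0.0, ratio))
    linear = user.linear * scale if user.linear > 0.0 else user.linear
    return Command(linear=linear, angular=user.angular)


if __name__ == "__main__":
    joystick = Command(linear=1.0, angular=0.2)
    for distance in (3.0, 0.9, 0.1):
        print(distance, correct_command(joystick, distance))
```

In this sketch the user always keeps control of the heading; only the forward speed is corrected, which is one possible way of sharing control between the user and the sensors.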

Note that five papers exploiting the indoor mobile robots were published this year [15], [30], [31], [53], [60].

Outdoor Vehicles

A camera rig has been developed in Sophia Antipolis. It can be fixed to a standard car (see Fig. 4), which is driven at a variable speed depending on the road and traffic conditions, with an average speed of 30 km/h and a maximum speed of 80 km/h. The sequences are recorded at a frame rate of 20 Hz, with the six global shutter cameras of the stereo system synchronized, producing spherical images with a resolution of 2048x665 pixels (see Fig. 4). Such sequences are fused offline to obtain maps that can be used later for localization or for scene rendering (in a similar fashion to Google Street View), as shown in the video http://www-sop.inria.fr/members/Renato-Jose.Martins/iros15.html.
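
To give a sense of the spatial sampling these figures imply, the short computation below converts the driving speeds into the distance travelled between two consecutive frames at 20 Hz. It is only a worked example: the function name `metres_between_frames` is introduced for illustration, and the car is assumed to move at constant speed between frames.

```python
FRAME_RATE_HZ = 20.0  # frame rate stated in the text


def metres_between_frames(speed_kmh: float,
                          frame_rate_hz: float = FRAME_RATE_HZ) -> float:
    """Distance travelled between two consecutive frames at constant speed."""
    speed_ms = speed_kmh * 1000.0 / 3600.0  # km/h to m/s
    return speed_ms / frame_rate_hz


if __name__ == "__main__":
    for label, speed_kmh in (("average", 30.0), ("maximum", 80.0)):
        spacing = metres_between_frames(speed_kmh)
        print(f"{label} speed {speed_kmh:.0f} km/h: {spacing:.2f} m between frames")
    # average speed 30 km/h: 0.42 m between frames
    # maximum speed 80 km/h: 1.11 m between frames
```

Consecutive spherical images are therefore roughly 0.4 m apart at the average speed and about 1.1 m apart at the maximum speed.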

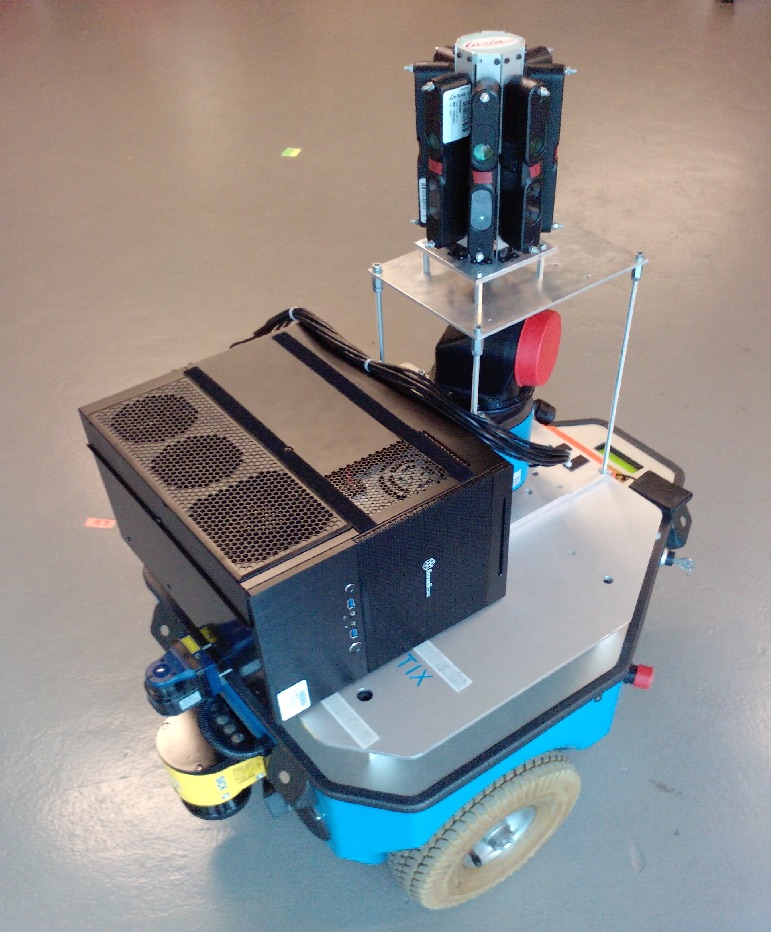

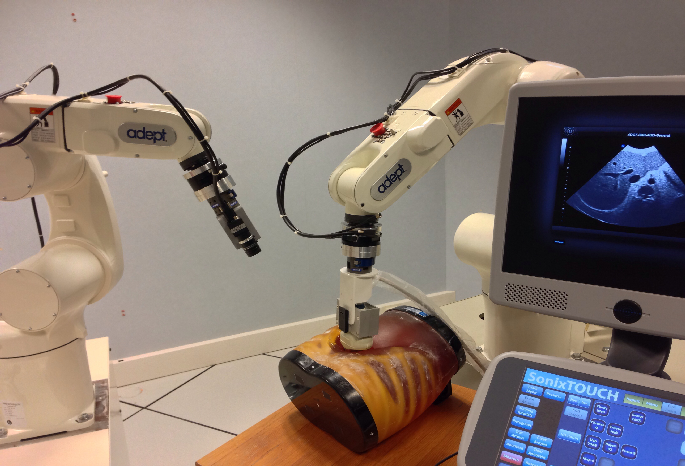

Medical Robotic Platform

Participants: Marc Pouliquen, Fabien Spindler [contact], Alexandre Krupa.

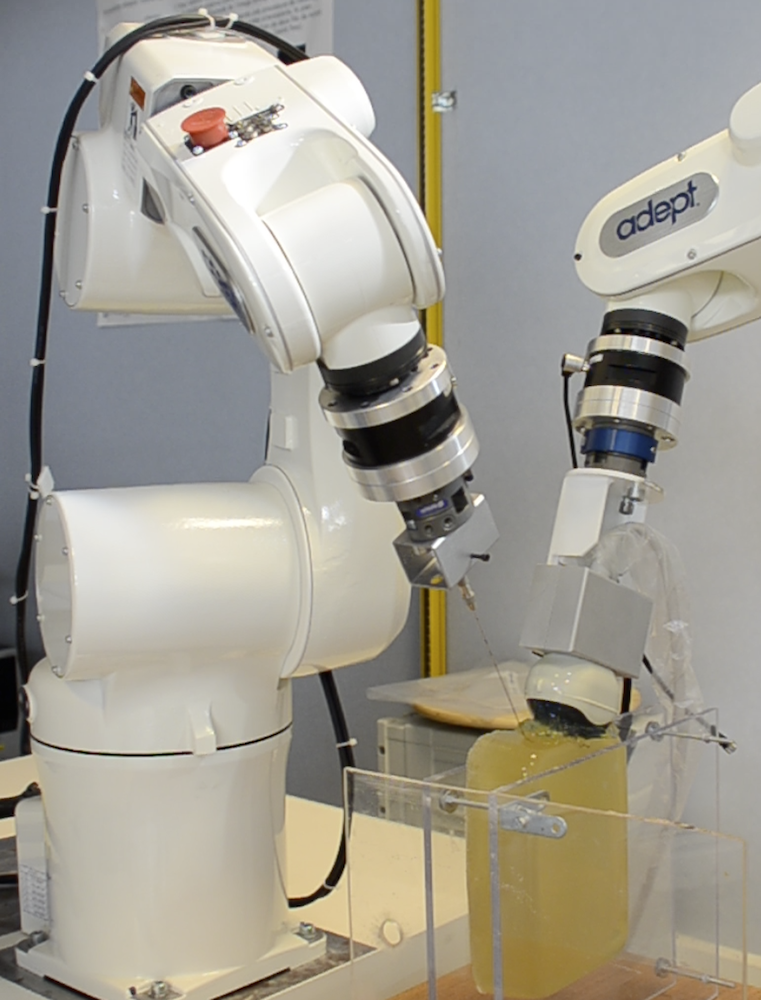

This platform is composed of two 6 DoF Adept Viper arms (see Fig. 5.a). Ultrasound probes connected either to a SonoSite 180 Plus or to an Ultrasonix SonixTouch imaging system can be mounted on a force/torque sensor attached to each robot end-effector. The haptic Virtuose 6D device (see Fig. 2.b) can also be used within this platform.

This testbed is of primary interest for research and experiments on ultrasound visual servoing applied to probe positioning, soft tissue tracking, elastography or robotic needle insertion tasks (see Section 7.3).

Note that seven papers published this year include experimental results obtained with this platform [56], [57], [72], [33], [19], [48], [37].

Humanoid Robots

Participants: Giovanni Claudio, Fabien Spindler [contact].

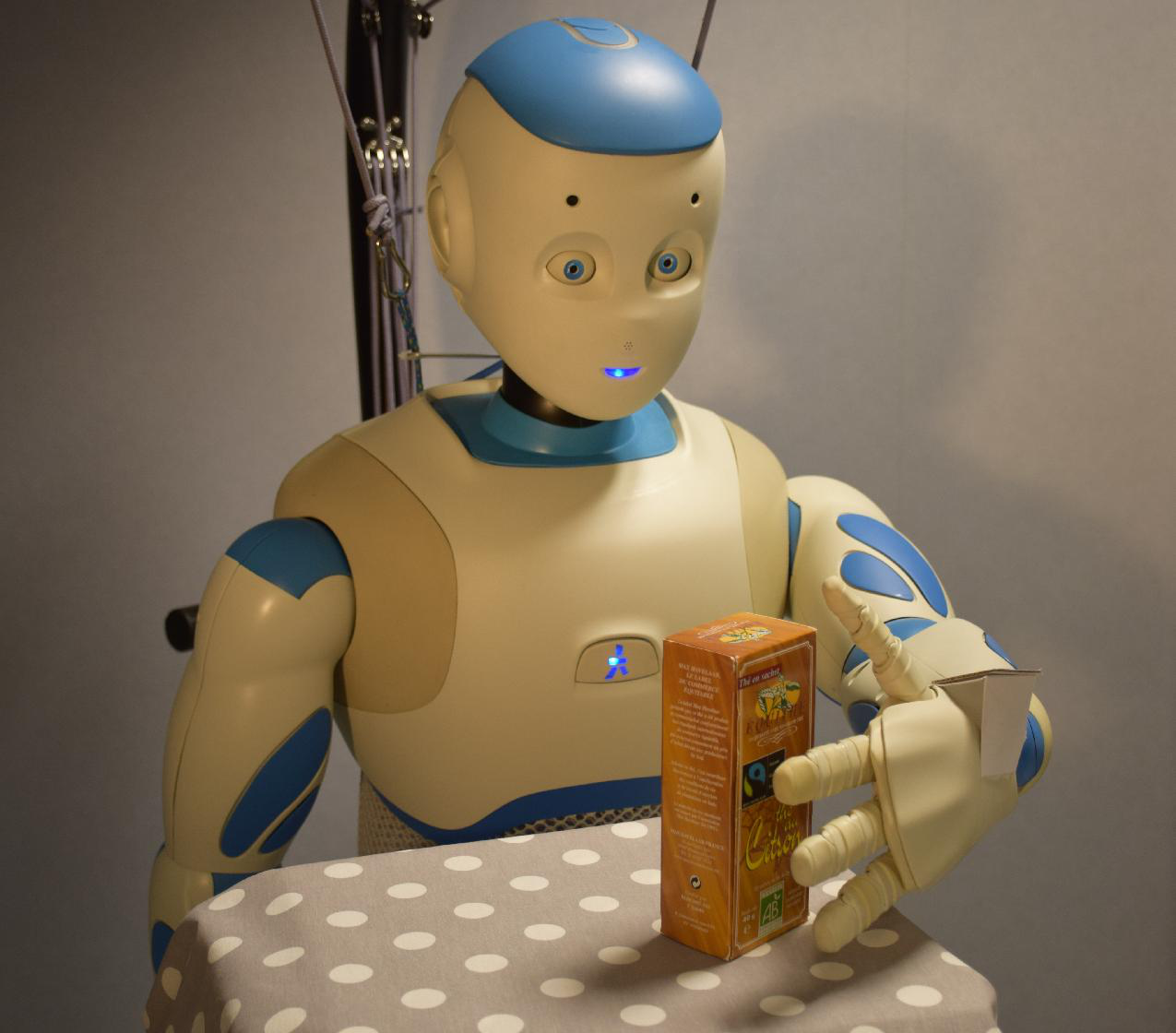

Romeo is a humanoid robot from SoftBank Robotics which is intended to be a genuine personal assistant and companion. Only the upper part of the body (trunk, arms, neck, head, eyes) is operational. This research platform is used to validate our research in visual servoing and visual tracking for object manipulation (see Fig. 6.a).

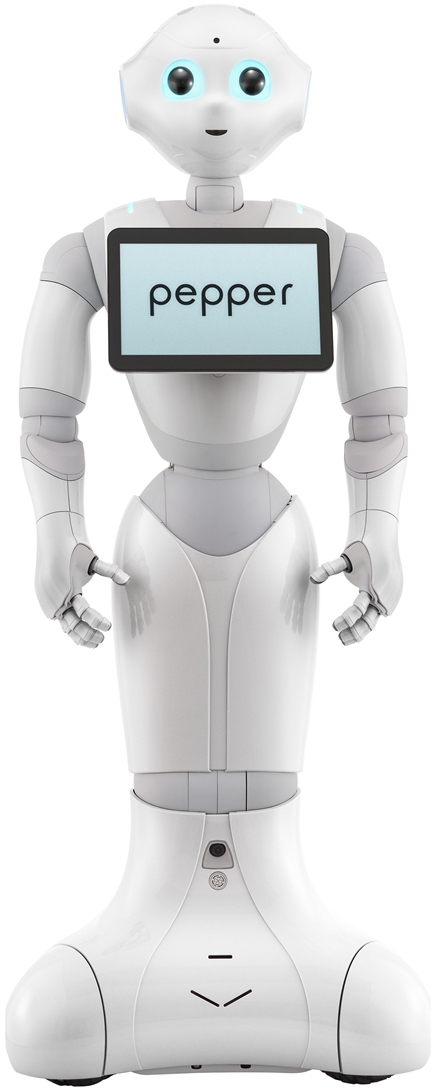

Last year, this platform was extended with Pepper, another human-shaped robot designed by SoftBank Robotics to be a genuine day-to-day companion (see Fig. 6.b). It has 17 DoF mounted on a wheeled holonomic base and a set of sensors (cameras, laser, ultrasound, inertial, microphone) that make this platform interesting for research in vision-based manipulation and visual navigation (see Section 7.5.1).

Note that two papers published this year include experimental results obtained with these platforms [13], [60].

Unmanned Aerial Vehicles (UAVs)

Participants: Thomas Bellavoir, Pol Mordel, Paolo Robuffo Giordano [contact].

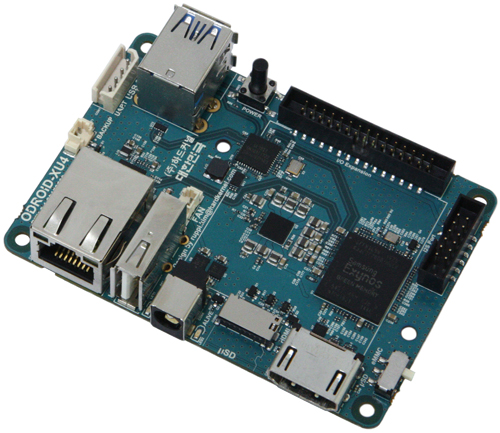

Since 2014, Lagadic has also been working on perception and control for single and multiple quadrotor UAVs, notably thanks to a grant from “Rennes Métropole” (see Section 9.1.4) and the ANR project “SenseFly” (see Section 9.2.5). To this end, we purchased four quadrotors from Mikrokopter GmbH, Germany (see Fig. 7.a), and one quadrotor from 3DRobotics, USA (see Fig. 7.b). The Mikrokopter quadrotors have been heavily customized: the low-level attitude controller running on the onboard microcontroller was reprogrammed from scratch; each quadrotor was equipped with an Odroid XU4 board (see Fig. 7.d) running Linux Ubuntu and the TeleKyb software (the middleware used for managing the experiment flows and the communication among the UAVs and the base station); and Flea Color USB3 cameras were purchased together with the gimbal needed to mount them on the UAVs (see Fig. 7.c). The quadrotors are used as a robotic platform for testing a number of single- and multiple-UAV flight control schemes, with special attention to the use of onboard vision as the main sensory modality.

Note that four papers published this year include experimental results obtained with this platform [49], [50], [42], [62].